Hello,

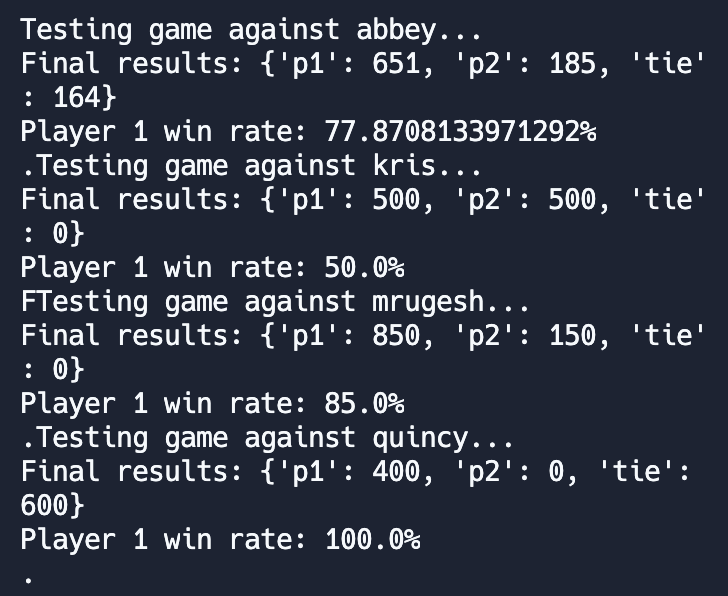

I am having some inconsistencies in whether my code passes for the RPS project. Here are some examples:

vs

I didn’t notice this until after I submitted, unfortunately, so I’d like to clear it up quickly in case I get audited. I am wondering what could be causing this type of performance.

Here is my code:

import numpy as np

import random

def player(prev_play, opponent_history=[], self_history = [], Q=np.zeros((3,3,27))):

opponent_history.append(prev_play)

op_hist= opponent_history.copy()

original = True

while original:

try:

op_hist.remove('')

except: break

ideal_response = {'R' : 'P', 'P':'S', 'S':'R'}

guess = 0

# Update Q table

states = ['RRR' , 'RRP', 'RRS', 'RPP', 'RPR', 'RPS', 'RSS', 'RSP', 'RSR',

'PRR' , 'PRP', 'PRS', 'PPP', 'PPR', 'PPS', 'PSS', 'PSP','PSR',

'SRR' , 'SRP', 'SRS', 'SPP', 'SPR', 'SPS', 'SSS', 'SSP', 'SSR'

]

actions = ['R', 'P', 'S']

plays = ['R', 'P', 'S']

if len(op_hist) > 4:

# previous three opponent moves before my last move

last_three = ''.join(op_hist[-4:-1])

last_state = states.index(last_three)

last_action = self_history[-2] # my second last play

action = actions.index(last_action)

last_play = self_history[-1] # my last play

play = plays.index(last_play)

last_opponent_play = op_hist[-1]

if last_play == ideal_response[last_opponent_play]: # if I won

Q[play, action, last_state] += 1

elif last_play == last_opponent_play: # if I tied

Q[play, action, last_state] += 0

else:

Q[play, action, last_state] += -1 # if I lost

# If at least 50 plays done, then check for patterns

if len(op_hist) >=50 :

# Check for patterns in their responses

string_responses=''

for i in range(50):

string_responses = string_responses + op_hist[-i]

maximum = 0

for pattern in ['RR','SS','PP']:

maximum = maximum + string_responses.count(pattern)

# If a pattern >= 60% of the time, play what beats it

if maximum >= 15 :

for pattern in ['RR','SS','PP']:

if op_hist[-1] == pattern[0]:

predicted_next = pattern[-1]

guess = ideal_response[predicted_next]

original = False

elif maximum < 15 :

# Check for patterns dependent on previous self throw

countRP = 0

countSR = 0

countPS = 0

for i in range(1,50):

throw=self_history[-i]

response=op_hist[-i+1]

if (throw == 'R') & (response == 'P'):

countRP = countRP + 1

elif (throw == 'S') & (response == 'R'):

countSR = countSR + 1

elif (throw == 'P') & (response == 'S'):

countPS = countPS + 1

patterns= {'RP': countRP, 'SR':countSR, 'PS': countPS}

#If pattern exists >60% of the time, play what beats it

if sum(patterns.values()) > 30:

for pattern in patterns.keys():

if pattern[0] == self_history[-1]:

predicted_response = pattern[1]

guess=ideal_response[predicted_response]

original = False

# If no patterns, play based on Q table

if ((len(op_hist) > 20) & (original is True)) :

direct_last_three = ''.join(op_hist[-3:]) # last three opponent moves

current_state = states.index(direct_last_three)

current_action = actions.index(self_history[-1]) # my previous play

i = np.argmax(Q[:, current_action, current_state]) # most likely prev + next move that results in me winning

guess = plays[i]

# Random guess up to 20

elif len(op_hist) <= 20:

guess = (random.sample(plays,1))[0]

self_history.append(guess)

return guess

The latest part I added was the Q table (I know it’s not really a Q learning table, but I am calling it that for lack of a better name). Before this part, it consistently passed the everyone except abbey (consistent failure).

I thought I added the Q table strategy in such a way that it would only affect the games against abbey, since the other methods are used if they work (>60% of the time). But, now it is sometimes failing kris, and also sometimes failing abbey. Also, the win rates are varying by >15% for abbey, and > 50% for kris. The other two players are still consistently passing.