Hey,

So I have this model which is pretrained by Google and also trained on a tfds dataset:

import matplotlib.pyplot as plt

import tensorflow as tf

import tensorflow_datasets as tfds

keras = tf.keras

# split the data manually into 80% training, 10% testing, 10% validation

(raw_train, raw_validation, raw_test), metadata = tfds.load(

'cats_vs_dogs',

split=['train[:80%]', 'train[80%:90%]', 'train[90%:]'],

with_info=True,

as_supervised=True,

)

"""""

Since the sizes of our images are all different, we need to convert them all to the same size.

We can create a function that will do that for us below.

"""""

IMG_SIZE = 160 # All images will be resized to 160x160

def format_example(img, lab):

"""

returns an image that is reshaped to IMG_SIZE

"""

img = tf.cast(img, tf.float32)

img = (img / 127.5) - 1

img = tf.image.resize(img, (IMG_SIZE, IMG_SIZE))

img = tf.expand_dims(img, axis=0)

return img, lab

# Now we can apply this function to all our images using .map().

# .map() takes every single example in raw_train, raw_validation, etc and applies the function to it

train = raw_train.map(format_example)

validation = raw_validation.map(format_example)

test = raw_test.map(format_example)

BATCH_SIZE = 32

SHUFFLE_BUFFER_SIZE = 1000

# Finally we will shuffle and batch the images

train_batches = train.shuffle(SHUFFLE_BUFFER_SIZE).batch(BATCH_SIZE)

validation_batches = validation.batch(BATCH_SIZE)

test_batches = test.batch(BATCH_SIZE)

IMG_SHAPE = (IMG_SIZE, IMG_SIZE, 3)

# Create the base model from the pre-trained model MobileNet V2

base_model = tf.keras.applications.MobileNetV2(input_shape=IMG_SHAPE,

include_top=False, # we don't want to load the top layer

weights='imagenet')

base_model.trainable = False

"""""

Adding OUR Classifier

"""""

global_average_layer = tf.keras.layers.GlobalAveragePooling2D()

# Finally, we will add the prediction layer that will be a single dense neuron. We can do this because we

# only have two classes to predict for.

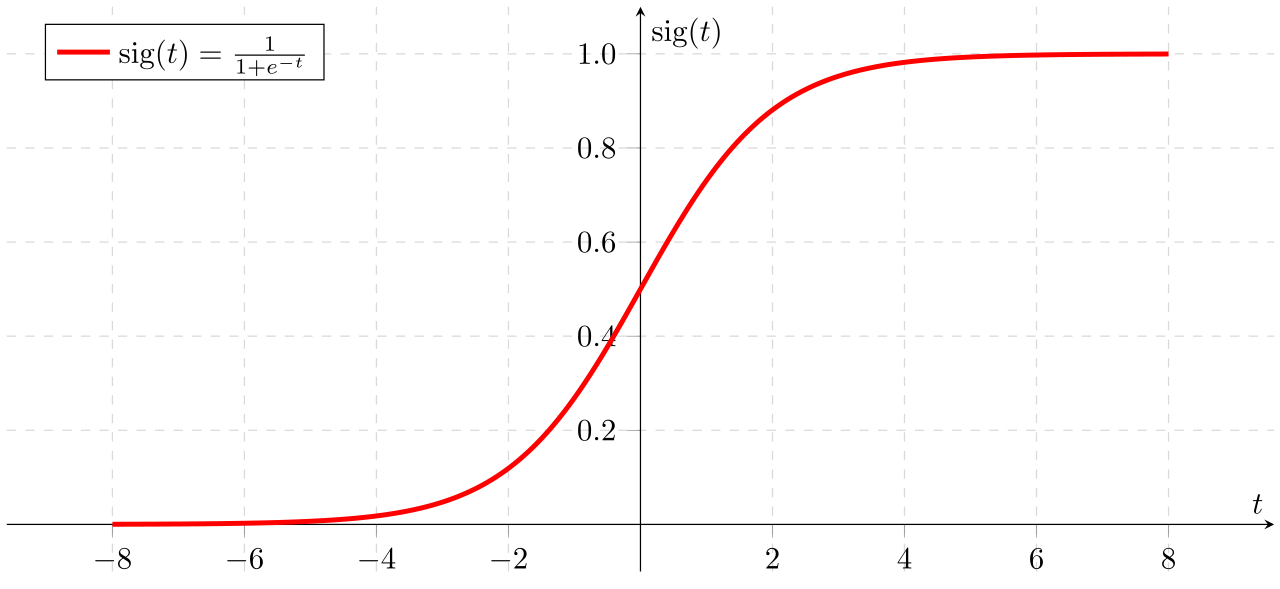

prediction_layer = keras.layers.Dense(1, activation='sigmoid')

# Now we will combine these layers together in a model.

model = tf.keras.Sequential([

base_model,

global_average_layer,

prediction_layer

])

# TRAINING THE MODEL

"""""

Now we will train and compile the model. We will use a very small learning rate to ensure that the model

does not have any major changes made to it.

"""""

base_learning_rate = 0.0001

model.compile(optimizer=tf.keras.optimizers.RMSprop(lr=base_learning_rate),

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

metrics=['accuracy'])

# Now we can train it on our images

initial_epochs = 10

history = model.fit(train_batches,

epochs=initial_epochs,

validation_data=validation_batches)

acc = history.history['accuracy']

print(acc)

# you can save a model and load a model with this syntax so you don't have to train it again every time

model.save("dogs_vs_cats.h5") # we can save the model and reload it at anytime in the future

new_model = tf.keras.models.load_model('dogs_vs_cats.h5')

predictions = new_model.predict(test)

print(predictions)

get_label_name = metadata.features['label'].int2str # creates a function object that we can use to get labels

i = 0

for image, label in raw_train.take(6):

plt.figure()

plt.imshow(image)

plt.title(get_label_name(label))

print(get_label_name(label))

print(predictions[i])

i += 1

Anyway, I am getting this output and I don’t know how to use it or understand it:

dog

[0.33223754]

dog

[0.48130825]

dog

[0.70762724]

cat

[0.26925486]

dog

[0.49678028]

dog

[0.45581067]

In the code, I print the label name and the prediction beneath it, but i dont understand what the number inside the array means and how to use it. I would apreciate it if someone could explain.

Thamk you!